
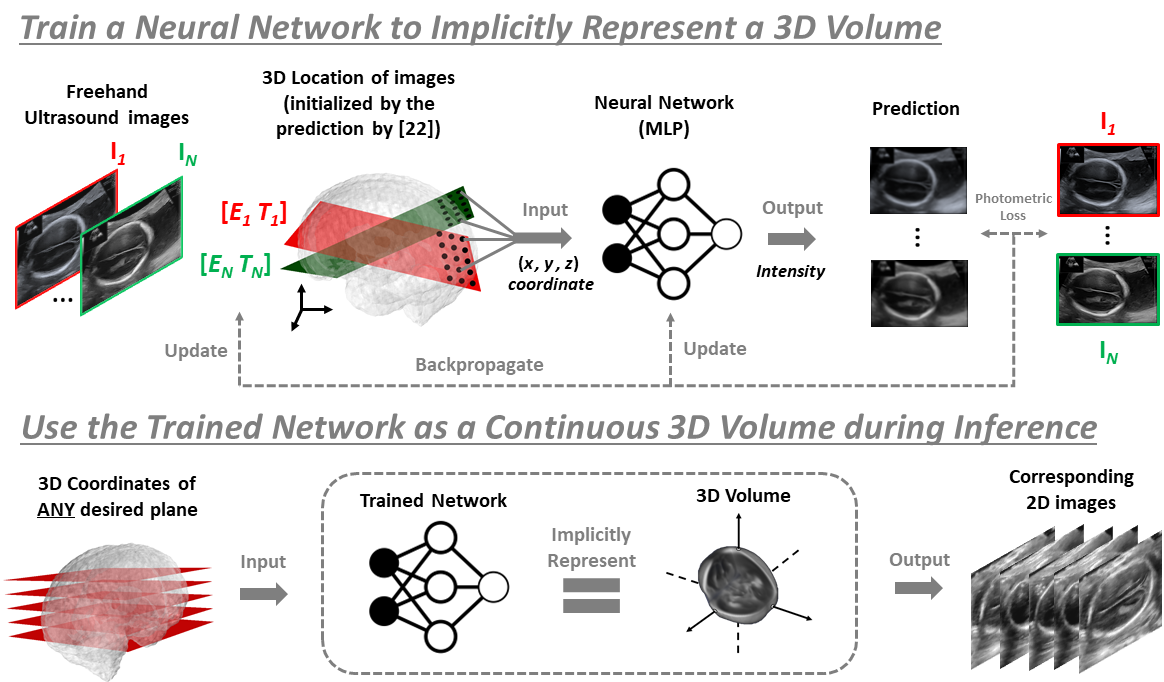

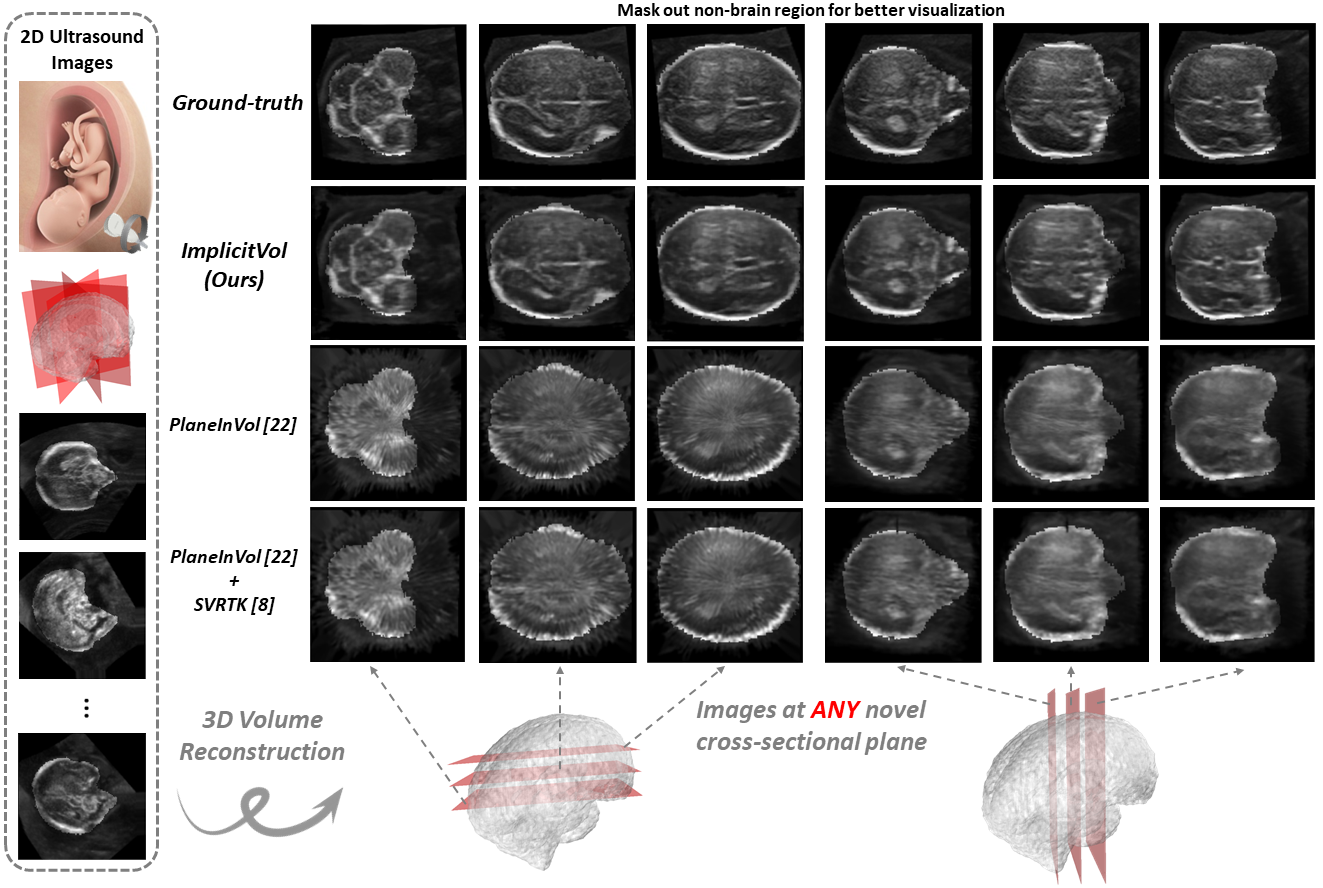

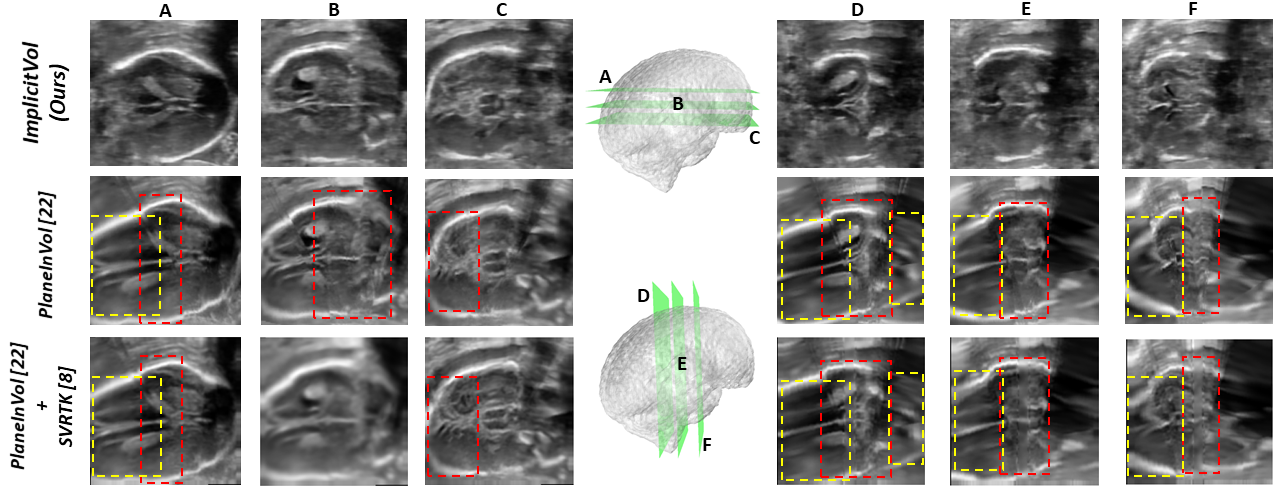

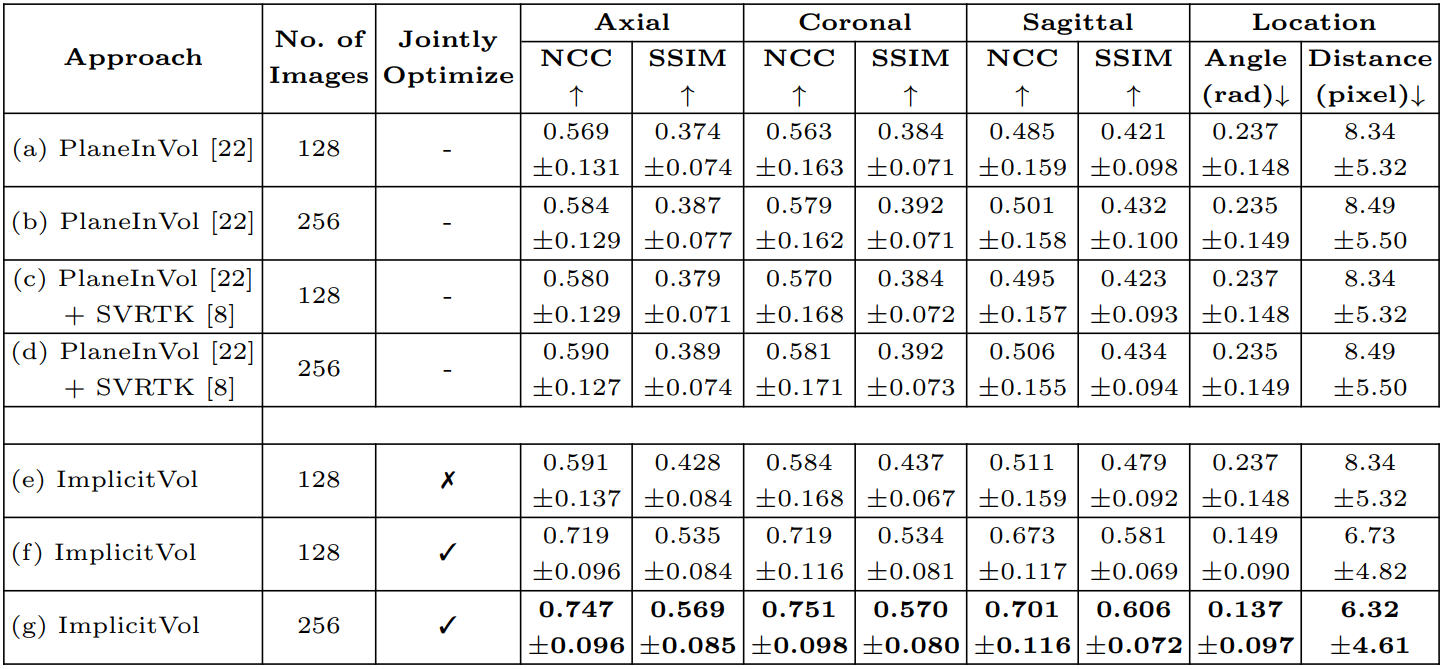

Three-dimensional (3D) ultrasound imaging has contributed to our understanding of fetal developmental processes by providing rich contextual information about inherently 3D anatomies. However, its use in clinical settings is limited by high purchasing costs and limited diagnostic practicality. Freehand 2D ultrasound imaging, in contrast, is routinely used in standard obstetric exams but inherently lacks a 3D representation of the anatomies, which limits its potential for more advanced assessment. Such full representations are challenging to recover even with external tracking devices, because internal fetal movement is independent of the operator-led trajectory of the probe. Capitalizing on the flexibility offered by freehand 2D ultrasound acquisition, we propose ImplicitVol to reconstruct 3D volumes from non-sensor-tracked 2D ultrasound sweeps. Conventionally, reconstructions are performed on a discrete voxel grid. We instead employ a deep neural network to represent, for the first time, the reconstructed volume as an implicit function. Specifically, ImplicitVol takes a set of 2D images as input, predicts their locations in 3D space, jointly refines the inferred locations, and learns a full volumetric reconstruction. When tested on natively acquired and volume-sampled 2D ultrasound video sequences collected from different manufacturers, the 3D volumes reconstructed by ImplicitVol show significantly better visual and semantic quality than existing interpolation-based reconstruction approaches. The inherent continuity of the implicit representation also enables ImplicitVol to reconstruct the volume at arbitrarily high resolution. As formulated, ImplicitVol has the potential to integrate seamlessly into the clinical workflow while providing richer information for diagnosis and evaluation of the developing brain.
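To make the idea of an implicit volume concrete, the following is a minimal, untrained sketch and not the authors' implementation. It assumes a small multilayer perceptron with sine activations and random weights as a stand-in for the learned network, and it describes each 2D image plane by a hypothetical origin plus two in-plane axis vectors. The names `make_mlp`, `implicit_volume`, `plane_points`, and `render_slice` are illustrative only. Pose prediction, joint refinement, and training are omitted; the sketch shows only how a continuous function of 3D coordinates can be sampled on any plane at any resolution.

```python
import math
import random


def make_mlp(sizes, seed=0):
    """Randomly initialised weights; a stand-in for a trained network (assumption)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def implicit_volume(layers, point):
    """Map one continuous 3D coordinate (x, y, z) to an intensity in (0, 1)."""
    h = list(point)
    for i, (weights, biases) in enumerate(layers):
        h = [sum(w * x for w, x in zip(row, h)) + b
             for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            h = [math.sin(v) for v in h]  # sine activation is an assumption
    return 1.0 / (1.0 + math.exp(-h[0]))  # squash the single output to (0, 1)


def plane_points(origin, u_axis, v_axis, n):
    """n x n grid of 3D points on the plane origin + s*u + t*v, with s, t in [-1, 1]."""
    ticks = [-1.0 + 2.0 * k / (n - 1) for k in range(n)]
    return [[tuple(o + s * u + t * v for o, u, v in zip(origin, u_axis, v_axis))
             for s in ticks]
            for t in ticks]


def render_slice(layers, origin, u_axis, v_axis, n):
    """Query the implicit function at every pixel location of one 2D plane."""
    grid = plane_points(origin, u_axis, v_axis, n)
    return [[implicit_volume(layers, p) for p in row] for row in grid]


# Hypothetical pose: an axial plane through the volume centre.
layers = make_mlp([3, 16, 16, 1])
origin, u_axis, v_axis = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)

low_res = render_slice(layers, origin, u_axis, v_axis, 4)   # 4 x 4 pixels
high_res = render_slice(layers, origin, u_axis, v_axis, 7)  # 7 x 7 pixels
```

Because the function is defined at every continuous coordinate, the 7 x 7 slice is not an interpolation of the 4 x 4 slice: both query the same underlying function, so pixels that share a 3D location have the same intensity. A voxel grid, by contrast, fixes its resolution at reconstruction time.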


Bibtex

@article{yeung2021implicitvol,
  title={ImplicitVol: Sensorless 3D Ultrasound Reconstruction with Deep Implicit Representation},
  author={Yeung, Pak-Hei and Hesse, Linde and Aliasi, Moska and Haak, Monique and Xie, Weidi and Namburete, Ana IL and others},
  journal={arXiv preprint arXiv:2109.12108},
  year={2021}
}

@article{yeung2024implicitvol,
  title={Sensorless Volumetric Reconstruction of Fetal Brain Freehand Ultrasound Scans with Deep Implicit Representation},
  author={Yeung, Pak-Hei and Hesse, Linde and Aliasi, Moska and Haak, Monique and Xie, Weidi and Namburete, Ana IL and others},
  journal={Medical Image Analysis},
  volume={94},
  pages={103147},
  year={2024},
  publisher={Elsevier}
}


Acknowledgements

The template for this project webpage was originally made by Phillip Isola and Richard Zhang for a colorful ECCV project; the code can be found here.